How do I use the abuse detection functionality with live user content?

The actual mode of integration depends on your requirements and the philosophy of your social network.

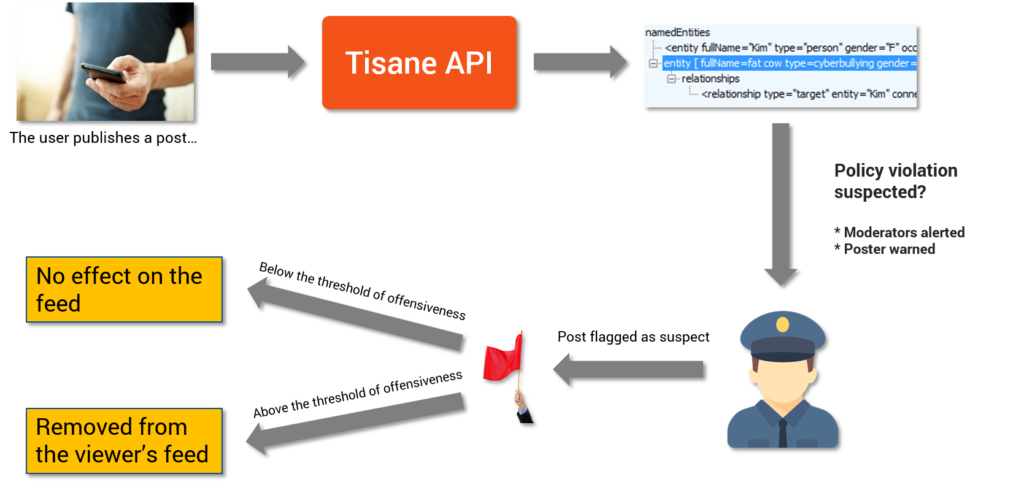

However, a typical integration flow could look like the figure below:

This is how it’s done:

- Tisane API flags suspect posts according to the severity level and the type of abuse (no one forces the social network to act on every alert)

- The poster and the moderators are alerted

- By default, the post is concealed, unless the user deliberately chose to read all posts, including offensive ones

- The moderators may override the assigned level of offensiveness, for example resetting it when an alert turns out to be a false positive
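The steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not Tisane's own code: it makes no API call and assumes the alerts have already been obtained as a list of dictionaries, each with a `type` and a `severity` field. The severity scale, the `Post` class, and every function name here are hypothetical; consult the Tisane API documentation for the actual response format.

```python
from dataclasses import dataclass, field

# Assumed severity scale, ordered from mildest to worst (hypothetical).
SEVERITY_ORDER = ["low", "medium", "high", "extreme"]


@dataclass
class Post:
    author: str
    text: str
    alerts: list = field(default_factory=list)  # alerts the network acts on
    concealed: bool = False


def actionable_alerts(alerts, min_severity="medium", ignored_types=()):
    """Keep only the alerts this network has decided to act on."""
    threshold = SEVERITY_ORDER.index(min_severity)
    return [
        a for a in alerts
        if a["type"] not in ignored_types
        and SEVERITY_ORDER.index(a["severity"]) >= threshold
    ]


def moderate(post, alerts, notify, min_severity="medium", ignored_types=()):
    """Flag the post, alert the poster and moderators, conceal by default."""
    post.alerts = actionable_alerts(alerts, min_severity, ignored_types)
    if post.alerts:
        post.concealed = True
        kinds = ", ".join(a["type"] for a in post.alerts)
        notify(post.author, f"Your post was flagged: {kinds}")
        notify("moderators", f"Post by {post.author} flagged: {kinds}")
    return post


def is_visible_to(post, reader_shows_offensive=False):
    """Concealed posts are shown only to readers who opted in to everything."""
    return not post.concealed or reader_shows_offensive


def moderator_override(post):
    """A moderator clears the flags, e.g. after a false positive."""
    post.alerts = []
    post.concealed = False
    return post
```

The `min_severity` and `ignored_types` parameters are where a network's own policy would go, since it does not have to act on every alert. `notify` is any callable taking a recipient and a message, so the same flow works with email, in-app messages, or a moderation queue.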

If the system misfires, both the poster and the operator have a way to correct the situation. On the other hand, the potential offenders feel the presence of a system that curbs their aggression.

This kind of architecture keeps the system transparent, explainable, and easy to control, while leaving the network free to decide how to act on each alert.