How do I filter by a list of keywords?

Most users rely on Tisane for context-aware moderation, but some may need simple keyword filtering.

There are two ways to implement it with Tisane. Both routes avoid the well-known “clbuttic problem”, where naive substring matching mangles innocent words such as “classic”.

By default, Tisane outputs only the abuse, sentiment, and entities_summary sections in its response. More detail is available on request, however, such as the words making up every sentence, or the parse tree (syntactic structure) of the sentence.

To output the words, add "words":true to the settings parameter.
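
As a minimal sketch, the request body might be built as follows. Only settings and "words":true come from the text above; the language and content field names are assumptions about the shape of a Tisane request, and build_request is a hypothetical helper, so check both against the API reference before relying on them.

```python
import json


def build_request(text, language="en"):
    """Build a request body that asks Tisane to include the words array.

    Assumption: the body carries `language` and `content` fields next to
    `settings`. Only "words": true inside `settings` comes from the article.
    """
    return {
        "language": language,
        "content": text,
        "settings": {"words": True},
    }


body = build_request("I bought a power plant.")
print(json.dumps(body, indent=2))
```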

All words are tokenized (divided into chunks); the tokenization algorithm in use depends on the language. This is transparent to the user, whether or not the language uses spaces, forms compounds as German and Dutch do, or has words that contain spaces (e.g. kung fu, or EE. UU. in Spanish). The result is a structured array named words, located inside the sentence_list structure in the response. Every word element contains:

- the actual string (text)

- the offset where it starts

- a stopword flag for stop words

- some internal Tisane IDs (discussed later)

- associated features like grammar or style
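
To make the structure concrete, here is a rough sketch that walks a response and prints each word element. The sample_response fragment is hypothetical and trimmed: its values, including the lexeme and family numbers, are made up for illustration, and iter_words is a helper name introduced here rather than part of Tisane.

```python
# Hypothetical, trimmed response fragment; all values are invented.
sample_response = {
    "sentence_list": [
        {
            "text": "He loves kung fu.",
            "words": [
                {"text": "He", "offset": 0, "stopword": True, "lexeme": 101, "family": 201},
                {"text": "loves", "offset": 3, "lexeme": 102, "family": 202},
                # A logical token: two space-separated strings, one word.
                {"text": "kung fu", "offset": 9, "lexeme": 103, "family": 203},
            ],
        }
    ]
}


def iter_words(response):
    """Yield every word element from every sentence in the response."""
    for sentence in response.get("sentence_list", []):
        for word in sentence.get("words", []):
            yield word


for word in iter_words(sample_response):
    flag = " (stop word)" if word.get("stopword") else ""
    print(f'{word["offset"]:>3} {word["text"]}{flag}')
```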

Route 1: simple (not recommended)

The simple, obvious solution is:

- Traverse the words array

- For every element, check whether the text attribute contains one of the prohibited words (or expressions: because the tokenization is logical, kung fu or power plant is one word)
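
The two steps above can be sketched as below. This is one reading of the approach, not a reference implementation: it compares whole tokens case-insensitively rather than searching for substrings, which is what keeps “ass” from matching “classic”. The response fragment and the function name are hypothetical.

```python
def find_prohibited_by_text(response, prohibited):
    """Return (offset, text) for every token whose text is on the list."""
    blocked = {term.casefold() for term in prohibited}
    hits = []
    for sentence in response.get("sentence_list", []):
        for word in sentence.get("words", []):
            text = word.get("text", "")
            # Whole-token comparison, not a substring search.
            if text.casefold() in blocked:
                hits.append((word.get("offset"), text))
    return hits


# Hypothetical response fragment for "The power plant is classic".
response = {
    "sentence_list": [
        {
            "words": [
                {"text": "The", "offset": 0},
                {"text": "power plant", "offset": 4},
                {"text": "is", "offset": 16},
                {"text": "classic", "offset": 19},
            ]
        }
    ]
}

print(find_prohibited_by_text(response, ["Power Plant", "ass"]))
# [(4, 'power plant')]
```

Note that an inflected form such as “power plants” would slip past this check, which is the first drawback discussed next.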

The simplicity comes with drawbacks, of course. What happens if the word is inflected? Some people will say “we can use the stem”, but it’s not always possible to reduce it to a stem. How do we capture the word “bought” in English based on the lemma “buy”? And more morphologically rich languages (French, German, Arabic, Russian, Hindi, etc.) have a lot more variety and numerous inflections.

Then, what happens if the word is obfuscated or, in information security speak, employs “adversarial text manipulations”?

And what if we want to screen any mention of Alaska (the state) but allow Alaska Air and baked Alaska?

Route 2 solves these issues.

Route 2: more powerful

Route 2 relies on Tisane’s internal IDs.

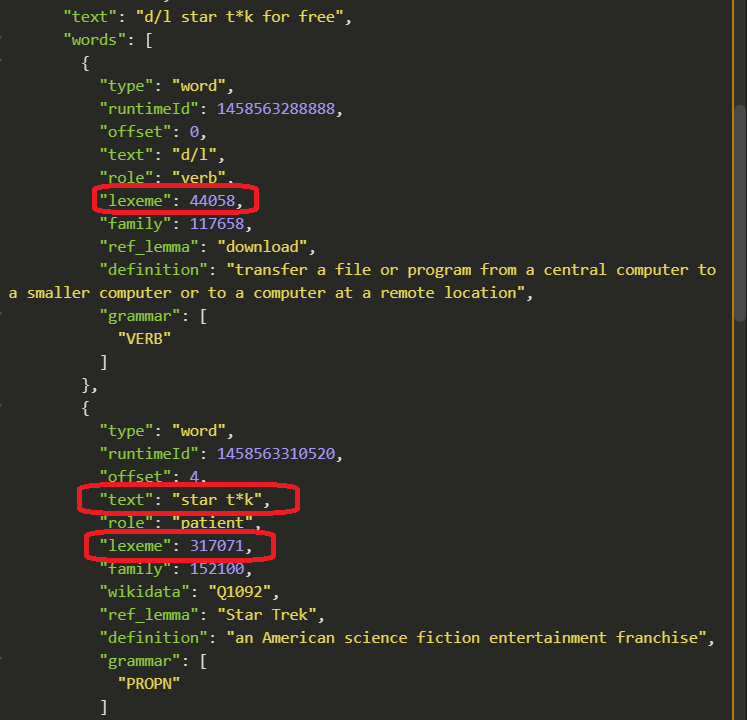

The internal identifiers in the word entries are lexeme and family. A lexeme ID in Tisane is associated with a word and all its possible inflections. If the word is obfuscated (e.g. “br*k” instead of “break”) or misspelled, and Tisane manages to recognize the original word, then the lexeme ID of the original word is provided.

Family ID is another option, if we want to filter according to the word-sense. E.g. a device called “elevator” in the US is called “lift” in the UK. It’s the same real-world entity, just different terms. They both have the same family ID. However, “lift” in the sense of aerodynamic lift has a different family ID. An added bonus here is that the family IDs are the same across languages. That is, you can create a “list of concepts” to capture, regardless of the language or the dialect. Power users may even filter by categories (e.g. any kind of plane, any kind of car, any kind of bird while ignoring “clay pigeon” but capturing “pigeon”, etc.).

But while Tisane is generally word-sense oriented, we came to realize that the difference between word-senses is not always obvious to users. Also, keyword filtering usually ignores the context intentionally. This is why we recommend using the lexeme ID.

For every word or phrase in your list:

- Look up the lexeme ID, either by running a sample sentence and getting the lexeme ID from there, or by using our Language Model Direct Access API.

- At analysis time, traverse the words array.

- For every word, compare the lexeme ID with the list of your lexeme IDs.
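
A minimal sketch of this procedure follows, using the sample-sentence option for the lookup. The lexeme numbers and both response fragments are invented for illustration, and the helper names are hypothetical; real IDs come from an actual Tisane response or the Language Model Direct Access API.

```python
def iter_words(response):
    """Yield every word element from every sentence in the response."""
    for sentence in response.get("sentence_list", []):
        for word in sentence.get("words", []):
            yield word


def lexeme_ids_for(sample_response, term):
    """Collect lexeme IDs of tokens in a sample response whose text is `term`."""
    wanted = term.casefold()
    return {
        word["lexeme"]
        for word in iter_words(sample_response)
        if "lexeme" in word and word.get("text", "").casefold() == wanted
    }


def find_blocked(response, blocked_ids):
    """Return (offset, text) for every token whose lexeme ID is blocked."""
    return [
        (word.get("offset"), word.get("text"))
        for word in iter_words(response)
        if word.get("lexeme") in blocked_ids
    ]


# Step 1: hypothetical response for the sample sentence "I buy it".
sample = {"sentence_list": [{"words": [
    {"text": "I", "offset": 0, "lexeme": 11},
    {"text": "buy", "offset": 2, "lexeme": 5001},
    {"text": "it", "offset": 6, "lexeme": 12},
]}]}
blocked = lexeme_ids_for(sample, "buy")

# Steps 2 and 3: hypothetical response for "She bought it". The inflected
# form carries the same (invented) lexeme ID as "buy".
analysis = {"sentence_list": [{"words": [
    {"text": "She", "offset": 0, "lexeme": 13},
    {"text": "bought", "offset": 4, "lexeme": 5001},
    {"text": "it", "offset": 11, "lexeme": 12},
]}]}

print(find_blocked(analysis, blocked))
# [(4, 'bought')]
```

Comparing the family attribute instead of lexeme in find_blocked would give the word-sense variant described earlier.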